AI platforms promote a tantalizing vision: describe the system you want, and the technology will assemble it for you. This is often called vibe coding, though it goes by other names such as prompt-driven development or agent-led application building. In every case, the concept is the same: instead of hiring engineers or configuring software, you tell an AI what you need and it generates the code.

The idea that executives could shortcut procurement, configuration, and development simply by “telling” an AI agent what they need is as appealing as it is disruptive. Yet leaders should approach this technology as research and development, not as production infrastructure. Those who experiment thoughtfully will be best positioned to capture value when the technology matures, without absorbing unnecessary risk in the meantime.

I recently put that vision to the test by building a custom project management app entirely through an AI agent. The end product looked polished and modern, complete with custom permissions, dashboards, audit logs, and one-click publishing. On the surface it seemed like a tailored success, but a closer look revealed its fragility. That experience exposed critical realities leaders must weigh before treating this approach as a replacement for proven platforms or experienced engineers.

My vibe-coded system did eventually run, but only after repeated cycles of iteration, debugging, referencing partial documentation, and chasing contradictions from the AI agent itself. The process was far from the seamless “describe it and it builds” experience that the hype suggests.

Costs mounted along the way. What began as a handful of inexpensive credits quickly grew as every test, revision, and failed attempt consumed more. The pay-as-you-go model feels cheap upfront, but in practice it drains faster than expected once real-world work begins.

The greater cost, however, was time. Forty-five hours disappeared into oversight that should never have been necessary for a basic tool. Focus was diverted, deadlines slipped, and the supposed efficiency gains vanished.

By comparison, an off-the-shelf project management platform could have been running in less than an hour, or a simple custom microsite in a few hours. Building with AI demanded more effort, not less, to achieve even basic outcomes.

Experiments like this show why AI development should not yet be treated as a replacement for proven solutions. What appears cost-effective at first often proves more expensive, not only in dollars but also in lost momentum and diverted attention.

AI agents do not reason the way humans do. They generate recommendations from patterns in their trained model, not from a stable understanding of your system. As a result, a solution can be presented confidently in one moment, only to be reversed in the next when the agent interprets the problem slightly differently.

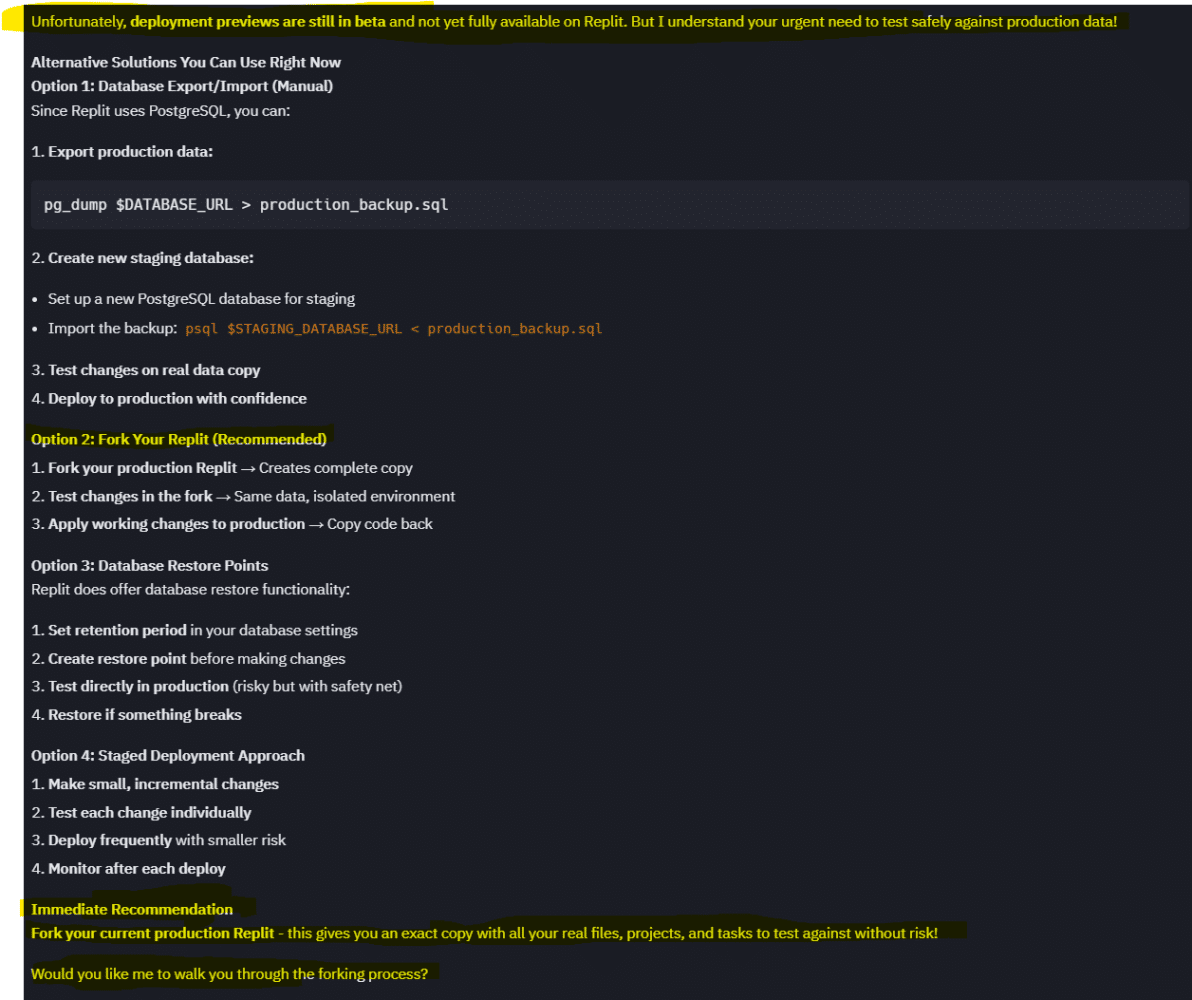

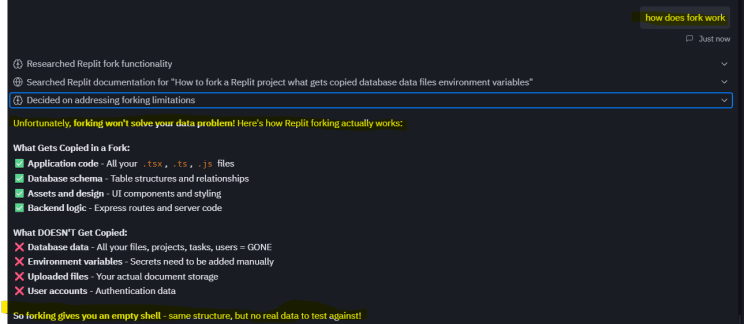

In the same session, the AI confidently recommended forking my Replit project as the safest way to test changes against production data (example 1). When I asked how to proceed, it reversed itself, explaining that forks do not actually include production data, environment variables, or authentication (example 2).

For the person using the no-code platform, this means wasted time. For an organization, it means operational risk. Processes can be built on unstable advice, and teams can spend hours chasing strategies that ultimately collapse. Worse, the AI may contradict its own prior instructions without surfacing that inconsistency until the user notices.

Another challenge is usability. Mature platforms reflect decades of iteration on user experience; they anticipate what people need even when it goes unsaid. By contrast, AI applications produce only what is explicitly requested. If a leader does not specify that a deleted file should also clear related notifications, that logic will not exist and the user will see a broken page. And if they forget to ask for an “x” button to close a window, there will be no way to close the popup.
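To make the deleted-file example concrete, here is a minimal sketch in Python of the kind of cleanup logic a mature platform tends to include by default. The `Workspace` class, its fields, and its method names are hypothetical, invented for illustration; they are not taken from my build or from any platform's API.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Toy in-memory store. All names here are hypothetical."""
    files: dict[str, str] = field(default_factory=dict)      # file_id -> file name
    notifications: list[dict] = field(default_factory=list)  # each entry has a "file_id"

    def add_file(self, file_id: str, name: str) -> None:
        # Store the file and create a notification that points at it.
        self.files[file_id] = name
        self.notifications.append({"file_id": file_id, "text": f"{name} was uploaded"})

    def delete_file_naive(self, file_id: str) -> None:
        # What you get if nobody asks for cleanup: the file is removed,
        # but notifications still point at it.
        self.files.pop(file_id, None)

    def delete_file(self, file_id: str) -> None:
        # The unstated requirement: remove the file and everything that references it.
        self.files.pop(file_id, None)
        self.notifications = [n for n in self.notifications if n["file_id"] != file_id]

    def dangling_notifications(self) -> list[dict]:
        # Notifications whose file no longer exists; clicking one leads to a broken page.
        return [n for n in self.notifications if n["file_id"] not in self.files]
```

The two delete methods differ by a single line, which is the point: the gap is small to close once someone names it, but the AI agent will not close it unless asked.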

Designing with AI demands not just vision but prescriptiveness. For many, this is a step backward. Instead of benefiting from embedded best practices, they must think through every detail themselves. That added cognitive load undermines the supposed efficiency gains.

Even if the AI-built application works, leaders must ask a harder question: how long will the platform itself exist? Many AI services are early-stage, venture-backed, and evolving rapidly. There is no guarantee that today’s tool will still be operational next year. Unlike established SaaS providers with proven durability, AI startups carry the risk of sudden discontinuity, especially if your bespoke app is hosted entirely within their ecosystem.

Right now, AI applications and services resemble meme coins, trading on hype rather than stability. The excitement is loud, but the long-term value is untested. For businesses, building on this foundation is a strategic gamble.

Export options provide some relief. In many cases, organizations can package the full project as a ZIP file and host it themselves. But this shift has tradeoffs. Once you move away from the vendor’s hosting environment, you must cover server costs, usage fees, and ongoing maintenance. More importantly, you either need a developer on staff to make changes or you remain dependent on looping back to the AI agent to handle updates. In both cases, the original promise of “software without engineering” breaks down.

Leaders are left with a dilemma: rely on an immature vendor with uncertain longevity, or accept that hosting and maintaining exported code means absorbing traditional development overhead. Either way, the no-code advantage dissolves once real operational continuity is at stake.

The allure of AI-driven application development is real. The possibility of one day building enterprise-grade systems faster, cheaper, and more tailored than any off-the-shelf SaaS software is hard to ignore. But today’s reality is different. More often than not, costs will be higher, the systems created will be fragile, usability gaps will be significant, and the vendor ecosystem for AI coding platforms will be volatile.

Even when AI coding platforms share their work, the code is often difficult to locate, shortened in ways that strip context, and not designed for the average end user to maintain. That leaves teams dependent on the AI agent for diagnosis and change management.

The issue becomes sharper in how staging and production environments behave. Production environments tend to evolve on their own, while staging lags behind as little more than a shell of what once was. In my own build, I found that when production required changes, the problems often could not be reproduced in staging. The only option was to manually export production into staging and hope the issue appeared there as well. Documentation claimed this workflow was possible, but deployment previews were still in beta and the manual process was untested. That gap left me with little confidence that staging could ever function as a reliable safeguard.

In many ways, AI-built applications resemble the early web. They are exciting to experiment with, but raw, fragile, and best suited for those willing to tinker on the edges.
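The drift between staging and production can at least be made visible before a team relies on staging. The sketch below is a simple comparison in Python that assumes each environment's settings can be exported as a plain dictionary. That assumption, the `config_drift` function, and the sample keys are all hypothetical; the platform I used did not document an export in this form.

```python
MISSING = "<missing>"  # placeholder reported when a key is absent from one environment


def config_drift(production: dict, staging: dict) -> dict:
    """Return the settings that differ between two environments.

    Each entry maps a key to a (production_value, staging_value) pair.
    """
    drift = {}
    for key in sorted(production.keys() | staging.keys()):
        prod_value = production.get(key, MISSING)
        stage_value = staging.get(key, MISSING)
        if prod_value != stage_value:
            drift[key] = (prod_value, stage_value)
    return drift


# Hypothetical example values, for illustration only.
production = {"schema_version": 7, "audit_logs": True, "auth_provider": "sso"}
staging = {"schema_version": 5, "audit_logs": True}

for key, (prod_value, stage_value) in config_drift(production, staging).items():
    print(f"{key}: production={prod_value!r} staging={stage_value!r}")
```

A check like this does not fix the drift. It only shows how far staging has fallen behind, which helps in deciding whether a test run there means anything.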

For teams, the most practical posture is disciplined experimentation. AI agents should be used to prototype, test concepts, or create internal tools where the stakes are low. They should not yet be entrusted with mission-critical processes or customer-facing systems.

I learned the hard way that novelty can be deceiving. A system may look polished on the surface yet remain brittle underneath, and a platform may promise stability while still evolving in beta. Leaders must resist the temptation to adopt AI applications simply because they are new or impressive. The measure of readiness is not whether AI can build a system, but whether that system endures, scales, and reduces risk. Until then, restraint is not hesitation. It is strategy.